Ieri: il control plane GitHub Actions costa $0.002/min anche con runner self-hosting.

Oggi (Jared Palmer):

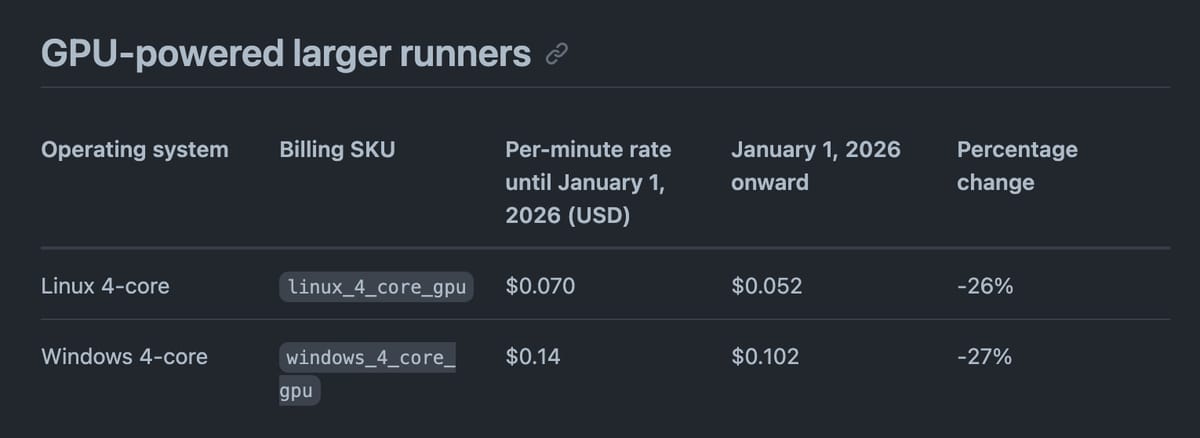

We’re postponing the announced billing change for self-hosted GitHub Actions. The 39% price reduction for hosted runners will continue as planned (on January 1)

We missed the opportunity to gather feedback from the community ahead of this move. That's a huge L. We'll learn and do better in the future.

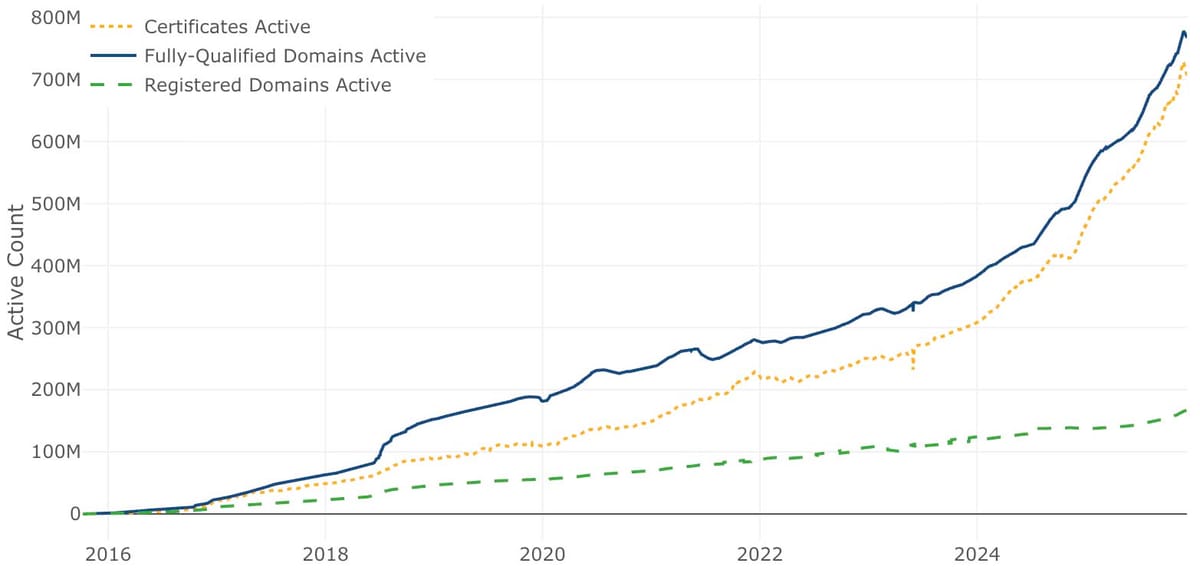

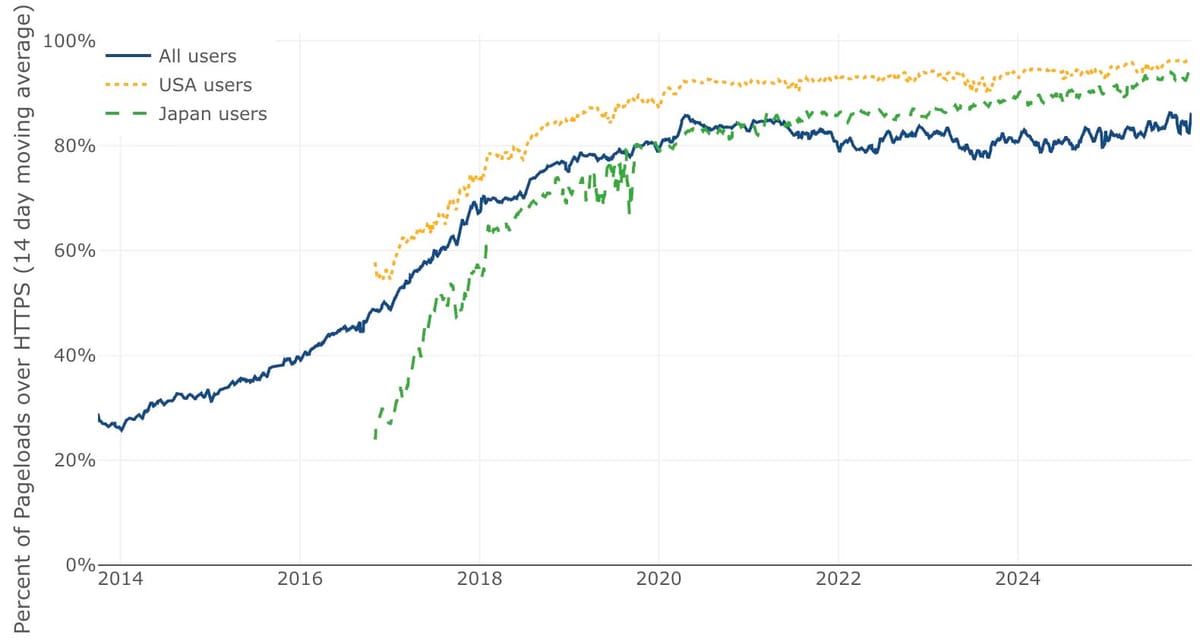

Actions is critical infrastructure for millions of developers and we're committed to making it a world‑class compute product. Although we gave away 11.5 billion build minutes (~$184 million) to support OSS last year, Actions itself is not free. There are real, web-scale costs associated with the service and behind the control plane (for logs, artifacts, caching, redis, egress, engineering, support, etc) for both hosted and self-hosted runners. We eventually need to find a way to price it properly while also partnering and fostering the rest of the ecosystem. However, we clearly missed some steps here, and so we’re correcting course.

You all trust Actions with your most important workflows, and that trust comes with a responsibility we didn't live up to. The way forward is to listen more, ship with the community, and raise the bar together.